If you can't see the app, please give the server some time to load it up.

20th April 2021

This application was built with the purpose of bringing together and demonstrating a series of natural language processing (NLP) techniques that are commonly used to solve data science problems related to unstructured text data.

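The following NLP techniques are demonstrated in this application: text classification, sentiment analysis, text summarization, information retrieval and keyword extraction.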

The application applies these techniques to text data extracted from a newspaper article (simply enter the URL of the article), and the code for this project can be found here.

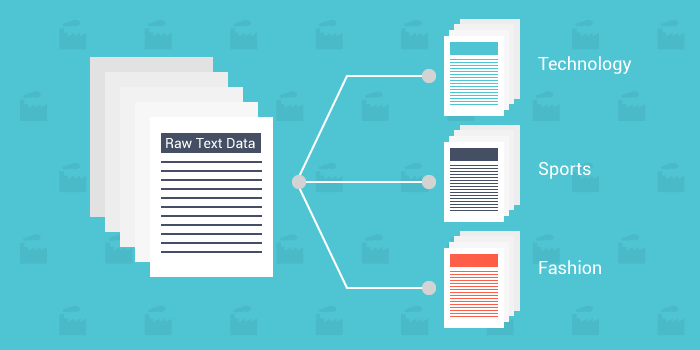

Text classification is typically a supervised learning problem: the aim is to build a model that correctly predicts the ground-truth category of new text data based on the data it was trained on. For this application, I've focused on classifying the genre of the newspaper article entered. The model was trained on BBC news article data, where each article is allocated a genre: tech, business, politics, sport or entertainment. Thus, the model is only able to associate articles with one of these 5 categories.

Photo credits: Towards Data Science

Steps 1, 4 and 5 are standard processes in the machine learning pipeline.

Step 2, cleaning the data, differs from typical data science problems because of the unstructured nature of text data. This step often involves removing HTML tags, special characters, stopwords and accents, as well as lemmatization. This process removes unwanted noise that could cause the model to overfit.
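As a rough illustration of the cleaning step, here is a minimal sketch using only the Python standard library. The `clean_text` helper and the tiny `STOPWORDS` set are hypothetical names made up for this example, not the code used in the application, and lemmatization is left out because it needs a library such as NLTK or spaCy.

```python
import re
import unicodedata

# Tiny illustrative stopword list (an assumption); a real pipeline would use a fuller one.
STOPWORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "is", "are", "was"}

def clean_text(raw):
    """Strip HTML tags, accents, special characters and stopwords."""
    text = re.sub(r"<[^>]+>", " ", raw)  # drop HTML tags
    # Decompose accented characters, then discard the combining marks.
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Lowercase, then replace anything that is not a letter or whitespace.
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    tokens = [tok for tok in text.split() if tok not in STOPWORDS]
    return " ".join(tokens)

cleaned = clean_text("<p>The résumé of the naïve striker was <b>impressive</b>!</p>")
print(cleaned)
```

The order matters here: accents are removed before the letters-only filter, otherwise accented characters would be dropped entirely rather than mapped to their plain form.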

Step 3, text vectorization or word embedding generation, involves turning the text data into numerical values for model training. Vectorization is often implemented through a count-based method, such as bag-of-words (BoW) or TF-IDF, which builds a vector for each article from how often each word in the corpus vocabulary occurs in it. Word embeddings, by contrast, leverage a deep learning method that creates dense vector representations of words, capturing contextual and semantic similarities between them. This method allows you to tune the size of the embedding vectors, making the dimension of the vector space much smaller than with BoW or TF-IDF. For this application, I simply used the TF-IDF method to prepare the text data for training.
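To make the count-based idea concrete, the sketch below computes plain TF-IDF by hand: term frequency within an article multiplied by the log of the inverse document frequency. The `tfidf` function is a hypothetical helper written for this example, and it assumes every document contains at least one word. Library implementations (for instance scikit-learn's `TfidfVectorizer`) typically add smoothing and normalization, so their numbers will differ.

```python
import math
from collections import Counter

def tfidf(documents):
    """Return the sorted vocabulary and one TF-IDF vector per document."""
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({tok for doc in tokenized for tok in doc})
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    doc_freq = Counter(tok for doc in tokenized for tok in set(doc))
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        vectors.append([
            (counts[term] / len(doc)) * math.log(n_docs / doc_freq[term])
            for term in vocabulary
        ])
    return vocabulary, vectors

docs = ["the match ended in a draw", "the new phone has a faster chip"]
vocab, vectors = tfidf(docs)
for term, weight in zip(vocab, vectors[0]):
    print(f"{term:>8}: {weight:.3f}")
```

Words that appear in every article, such as "the" here, receive a weight of zero, which is what lets TF-IDF emphasise the terms that distinguish one article from another.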

Once these steps are complete, the final model can be serialized, for example as a pickle file, and then imported in the Flask application during a POST request.
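The serialization round trip can be sketched as follows. The `model` dictionary and `predict` function are hypothetical stand-ins for a trained classifier, not the model used in the application; a real project would usually write the bytes to a file with `pickle.dump` and read them back when the web application starts.

```python
import pickle

# Hypothetical stand-in for a trained model: per-genre keyword weights.
model = {
    "labels": ["sport", "tech"],
    "weights": {
        "sport": {"match": 1.5, "goal": 2.0},
        "tech": {"software": 2.0, "chip": 1.5},
    },
}

def predict(model, tokens):
    """Return the label whose keyword weights sum highest for the tokens."""
    scores = {
        label: sum(model["weights"][label].get(tok, 0.0) for tok in tokens)
        for label in model["labels"]
    }
    return max(scores, key=scores.get)

# Serialize once, after training.
payload = pickle.dumps(model)
# Deserialize where predictions are served.
restored = pickle.loads(payload)

prediction = predict(restored, "the chip runs new software".split())
print(prediction)
```

Pickle files should only be loaded from sources you trust, since unpickling can execute arbitrary code.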

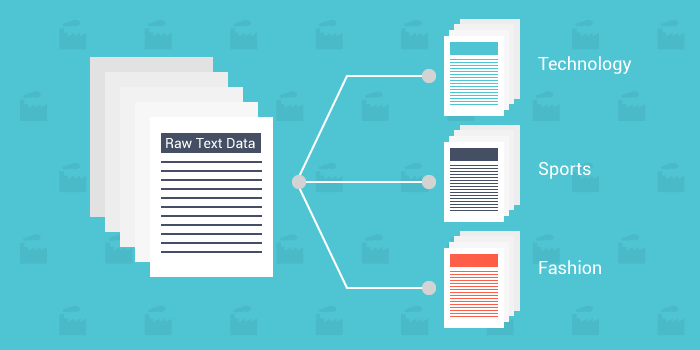

Sentiment analysis is a technique used to determine whether a piece of text expresses a positive, negative or neutral position. It is often performed on textual data such as reviews, questionnaires and customer feedback to monitor brand and product sentiment and to further understand customer needs.

Photo credits: Monkey Learn

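There are generally two approaches for building a model for sentiment analysis: a supervised machine learning approach, which trains a model on labelled examples, and an unsupervised lexicon-based approach.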

Here I've taken the unsupervised lexical approach to the problem, making use of the vocabulary of a predefined lexicon in which each word is associated with a sentiment score. Different lexicons are built from different types of text data, for example, social media posts, Wikipedia articles, etc. Thus, using the lexicon that is most closely related to the text data you are analysing can greatly improve the quality of your results.

The AFINN lexicon is used here, one of the most popular lexicons available for sentiment analysis. This lexicon was built from microblog text and contains over 3,300 words, each with an associated integer polarity score between -5 (most negative) and +5 (most positive). The words in the body of text are matched against the AFINN vocabulary, and their scores are combined to give the overall sentiment of the text.
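The lexicon lookup can be sketched in a few lines. The `LEXICON` dictionary below is a made-up miniature on AFINN's -5 to +5 scale; its entries are assumptions for illustration and are not copied from the real lexicon. The `sentiment_score` and `sentiment_label` helpers are likewise hypothetical names.

```python
import re

# Illustrative word scores on a -5..+5 integer scale (assumed values,
# not taken from the actual AFINN word list).
LEXICON = {"good": 3, "great": 3, "win": 4, "bad": -3, "terrible": -3, "loss": -3}

def sentiment_score(text):
    """Sum the lexicon scores of every word found in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(tok, 0) for tok in tokens)

def sentiment_label(score):
    """Map a numeric score to positive, negative or neutral."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for sentence in [
    "A great win for the team despite a bad start",
    "A terrible loss",
    "The report was published on Monday",
]:
    score = sentiment_score(sentence)
    print(sentence, "->", score, sentiment_label(score))
```

Words missing from the lexicon contribute nothing, which is why the choice of lexicon matters so much for the domain being analysed.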

Text summarization is a technique used to take a text document and return a few sentences that accurately summarize its contents. The main objective is to perform this summarization in an automated fashion by applying mathematical and statistical techniques to the contents and context of the text.

Photo credits: WordLift

There are many ways to approach text summarization (e.g. LSA, SVD, etc.), but for this application, I've implemented the TextRank algorithm. This is a graph-based ranking algorithm that ranks sentences based on their importance. The sentences are represented as vertices that are linked together by edges. This forms a ranking system where the importance of a sentence is decided by the number of edges connected to it, as well as the importance of the other sentences connected to it. Once the sentences are ranked, the top N sentences can be extracted as a summary of the text.
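The ranking idea can be sketched with the standard library alone. This is a simplified illustration under stated assumptions, not the implementation used in the application: sentences are split naively on punctuation, edge weights are the number of shared words divided by the log of each sentence's unique word count, and the scores come from a fixed number of PageRank-style iterations. All function names and parameters are hypothetical.

```python
import math
import re

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def similarity(a, b):
    """Shared-word count, normalised by the log of each sentence's size."""
    words_a = set(re.findall(r"[a-z]+", a.lower()))
    words_b = set(re.findall(r"[a-z]+", b.lower()))
    if not words_a or not words_b:
        return 0.0
    denom = math.log(len(words_a)) + math.log(len(words_b))
    return len(words_a & words_b) / denom if denom > 0 else 0.0

def textrank_summary(text, top_n=2, damping=0.85, iterations=50):
    sentences = split_sentences(text)
    n = len(sentences)
    # Edge weights between every pair of distinct sentences (vertices).
    weights = [[similarity(sentences[i], sentences[j]) if i != j else 0.0
                for j in range(n)] for i in range(n)]
    out_sum = [sum(row) for row in weights]
    scores = [1.0] * n
    for _ in range(iterations):
        # A sentence inherits importance from the sentences linked to it.
        scores = [
            (1 - damping) + damping * sum(
                weights[j][i] / out_sum[j] * scores[j]
                for j in range(n) if out_sum[j] > 0
            )
            for i in range(n)
        ]
    # Keep the top_n highest-scoring sentences, in their original order.
    best = sorted(sorted(range(n), key=lambda i: scores[i], reverse=True)[:top_n])
    return [sentences[i] for i in best]

article = (
    "The striker scored two goals in the final. "
    "The final was played in heavy rain. "
    "The goals came late in the final. "
    "Tickets cost more this year."
)
summary = textrank_summary(article, top_n=2)
print(summary)
```

The last sentence shares no words with the others, so it has no edges and keeps only the baseline score, which keeps it out of the summary.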

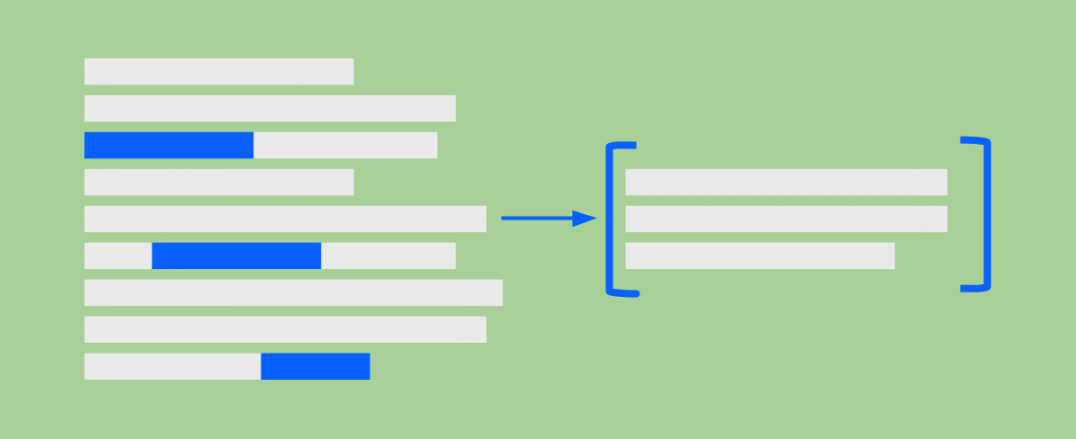

Information retrieval is a very exciting area in the field of natural language processing. In short, it focuses on retrieving information based on user input queries by ranking the search results and selecting the best response. Here, I've used BERT, an open-source language model created by Google that applies bidirectional training of a Transformer, a popular attention model, to language modelling. This gives it a deeper sense of language context and flow than single-direction language models.
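The text does not say exactly how BERT is applied here, so the following only sketches the generic ranking step: given a vector for the query and a vector for each candidate passage, order the passages by cosine similarity. The three-dimensional vectors are hand-written stand-ins for embeddings a model such as BERT would produce, and `cosine` and `rank_passages` are hypothetical helper names.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank_passages(query_vec, passage_vecs):
    """Return passage indices ordered from most to least similar to the query."""
    scored = [(cosine(query_vec, vec), idx) for idx, vec in enumerate(passage_vecs)]
    return [idx for _, idx in sorted(scored, reverse=True)]

# Stand-in embeddings (assumed values); a real system would compute these with a model.
query = [0.9, 0.1, 0.0]
passages = [
    [0.1, 0.9, 0.2],
    [0.8, 0.2, 0.1],
    [0.0, 0.2, 0.9],
]
ranking = rank_passages(query, passages)
print(ranking)
```

The first index in `ranking` is the passage that would be returned as the best response.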

Keyword extraction is a subset of keyphrase extraction, the process of extracting key terms or phrases from a body of unstructured text so that its core themes are captured. For the sake of simplicity, I've employed Gensim's keywords function, which uses a variation of the TextRank algorithm.